Integrating 4D Gaussian Splats into Omniverse and Isaac Sim

Progress, Challenges, and What Comes Next

At Evercoast, we’ve been working to integrate our 4D Gaussian Splatting data into NVIDIA’s Omniverse ecosystem, with a focus on testing inside Isaac Sim. Static splats (single-frame Gaussian Splat representations) are already gaining traction in the Omniverse community for photorealistic scene capture and environment scanning, where they work well for static assets and environmental reconstruction.

Our focus, however, is on time-sequenced splats: dynamic, multi-frame volumetric video that captures human motion and real-world interactions with high fidelity. We’re exploring how well Omniverse and Isaac Sim can support this type of data for simulation, robotics, AI training, and digital twins.

Note: The observations and limitations described in this post are based on our internal testing with 4D Gaussian Splats in Omniverse and Isaac Sim. While consistent across our experiments, they may not reflect formal platform constraints or documented behavior.

Why 4D Gaussian Splats?

4D Gaussian Splats are a powerful way to represent real-world motion and appearance in volumetric form. Unlike traditional meshes or skeletal motion capture, splats preserve full surface and appearance detail, including soft tissue, fabric, hair, and complex lighting. They are time-sequenced, view-dependent, and inherently photorealistic.
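For readers new to the representation, it may help to see what a single Gaussian typically carries. The sketch below assumes the parameterization common in the Gaussian Splatting literature: a center, per-axis scales, a rotation quaternion, an opacity, and spherical-harmonic color coefficients that make appearance view-dependent. It is not a description of Evercoast’s file format. The covariance Σ = R S Sᵀ Rᵀ gives each splat its ellipsoidal, volumetric footprint. The per-frame `SplatSequence` layout is a hypothetical stand-in for time sequencing.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


@dataclass
class Gaussian:
    """One splat primitive in the common 3DGS parameterization (assumed, not a file spec)."""
    position: Vec3          # center of the Gaussian in world space
    scale: Vec3             # per-axis standard deviations
    rotation: Quat          # orientation as a unit quaternion (w, x, y, z)
    opacity: float          # peak alpha in [0, 1]
    sh_coeffs: List[float]  # spherical-harmonic color coefficients (view-dependent color)


def quat_to_matrix(q: Quat) -> List[List[float]]:
    """Rotation matrix for a unit quaternion (w, x, y, z)."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(g: Gaussian) -> List[List[float]]:
    """3x3 covariance Sigma = R S S^T R^T, with S = diag(scale)."""
    r = quat_to_matrix(g.rotation)
    # M = R S: column j of R is multiplied by scale[j]
    m = [[r[i][j] * g.scale[j] for j in range(3)] for i in range(3)]
    # Sigma = M M^T
    return [[sum(m[i][k] * m[j][k] for k in range(3)) for j in range(3)] for i in range(3)]


# Hypothetical time-sequenced layout: one list of Gaussians per frame.
SplatSequence = List[List[Gaussian]]
```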

While current implementations are not lightweight or performance-optimized, their value lies in fidelity. For simulation scenarios that demand realism — especially for perception-based AI and robotics training — splats offer a level of visual ground truth that other representations struggle to match.

Context: Omniverse and Isaac Sim

Omniverse is NVIDIA’s collaborative 3D platform for real-time simulation and design, built around the Universal Scene Description (USD) standard. Isaac Sim is a robotics simulation application built on Omniverse, providing tools for simulating sensors, environments, and robot behavior.

Our work has focused primarily on Isaac Sim, where accurate simulation of sensors and environments is critical. The question we’re exploring is whether 4D Gaussian Splats can become first-class citizens in that ecosystem: usable not just as static visual assets, but as integrated components of the simulation, rendering, and sensor workflows that depict dynamic scenes and situations.

What We Found

1. Physics and Collision

Splats are not mesh-based and do not have explicit surface geometry. As expected, they cannot interact with physics engines in Isaac Sim out of the box. Collisions, dynamics, or physical presence would require supplemental geometry such as bounding boxes or proxy meshes.
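A proxy of this kind can be derived from the splat data itself. The sketch below assumes each Gaussian is summarized by its center and its largest per-axis scale (standard deviation). It computes an axis-aligned box that covers every Gaussian out to k standard deviations. The default k = 3 is an arbitrary, conservative choice, not a tuned value. For a time-sequenced splat, the box would need to be recomputed for each frame.

```python
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def proxy_aabb(centers: Iterable[Vec3], max_scales: Iterable[float], k: float = 3.0) -> Tuple[Vec3, Vec3]:
    """Axis-aligned box enclosing each Gaussian's center +/- k * its largest scale.

    Treating the largest per-axis scale as an isotropic radius is conservative:
    the k-sigma ellipsoid of a rotated or elongated Gaussian always fits inside
    that sphere, so the box over-covers rather than clips.
    """
    lo = [float("inf")] * 3
    hi = [float("-inf")] * 3
    for c, s in zip(centers, max_scales):
        r = k * s
        for a in range(3):
            lo[a] = min(lo[a], c[a] - r)
            hi[a] = max(hi[a], c[a] + r)
    if lo[0] == float("inf"):
        raise ValueError("no Gaussians given")
    return (lo[0], lo[1], lo[2]), (hi[0], hi[1], hi[2])
```

The resulting box could then be authored as an invisible collider, for example a `UsdGeom.Cube` prim with `UsdPhysics.CollisionAPI` applied. A box is the crudest option. A convex hull or a coarse proxy mesh would fit a human performer far more closely.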

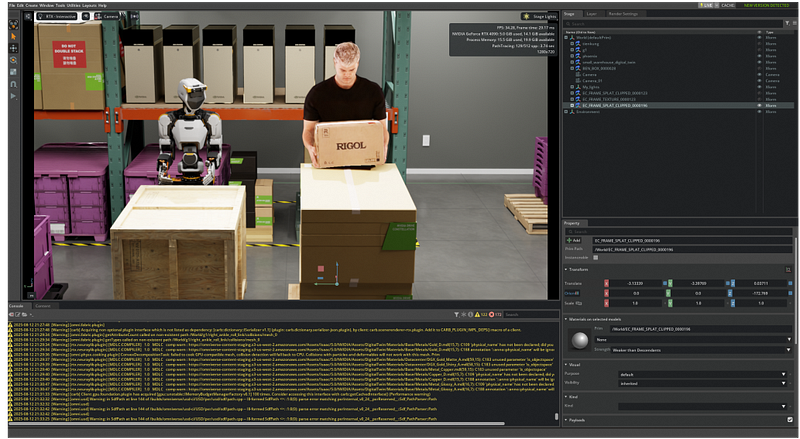

Physics collisions in Omniverse’s default physics scene, with an imported splat and an imported mesh.

2. Sensor Integration (LIDAR and Visual SLAM)

Using the Carter robot in Isaac Sim, we tested whether splats are visible to simulation sensors. We found that splats are not detected by LIDAR sensors: they do not appear in the depth scans or point clouds that these range sensors generate.

RGB cameras used in visual SLAM pipelines, however, can see the splats, and we were able to extract sparse point clouds from the camera imagery. This suggests splats are usable in vision-based workflows but are currently invisible to depth- and range-based systems.
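Range sensors generally report distances to surfaces along each ray. A splat has no surface to hit, which is consistent with what we observed. A depth value could in principle be derived from splats by borrowing the way depth maps are commonly rendered from Gaussian Splats: alpha-composite the depths of the Gaussians a ray passes through, front to back.

The sketch below assumes the per-ray hits, each a (distance, effective alpha) pair, have already been computed. The `min_weight` cutoff for treating a ray as a miss is an arbitrary assumption. How Isaac Sim’s sensors could expose such a value is an open question, not something we have implemented.

```python
from typing import List, Optional, Tuple


def composited_depth(hits: List[Tuple[float, float]], min_weight: float = 0.5) -> Optional[float]:
    """Alpha-weighted expected depth along one ray.

    hits: (distance, alpha) for each Gaussian the ray passes through, in any order.
    Returns None when total accumulated opacity is below min_weight (treated as a miss).
    """
    transmittance = 1.0   # fraction of the ray not yet absorbed
    weighted_depth = 0.0
    accumulated = 0.0     # accumulated opacity, equal to 1 - transmittance
    for distance, alpha in sorted(hits):  # front to back by distance
        w = transmittance * alpha
        weighted_depth += w * distance
        accumulated += w
        transmittance *= 1.0 - alpha
    if accumulated < min_weight:
        return None
    return weighted_depth / accumulated  # normalize by accumulated opacity
```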

Isaac Sim Carter robot NVBlox LIDAR example, showing that no depth is computed for the imported splat.

Isaac Sim Carter robot Visual SLAM example, showing the sparse point cloud representing the imported splat.

3. Temporal Playback

We built a simple time-sequenced playback setup by manually keying the visibility of individual .usdz splat files, one per frame. This worked for basic testing but does not scale, since every frame is a separate file whose visibility has to be keyed by hand. In our testing, current formats like .usdz do not natively support multi-frame splat sequences. A standardized approach for loading and playing splat sequences would greatly improve usability within both Omniverse and Isaac Sim.
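The visibility keys we set by hand follow a simple pattern: each per-frame prim is shown for exactly one time step and hidden otherwise. The sketch below generates that pattern. It assumes one prim per frame and frames laid out at consecutive time codes, and the prim paths in it are hypothetical. The values are USD’s standard visibility tokens, `"inherited"` and `"invisible"`. The helper `value_at` mimics USD’s held evaluation of time samples so the schedule can be checked without a stage.

```python
from typing import Dict, List, Tuple

Samples = List[Tuple[float, str]]


def visibility_keys(prim_paths: List[str], start: float = 0.0, step: float = 1.0) -> Dict[str, Samples]:
    """Keyframes that show exactly one per-frame splat prim at a time.

    Frame i becomes visible at start + i*step and is hidden again one step later.
    """
    keys: Dict[str, Samples] = {}
    last = len(prim_paths) - 1
    for i, path in enumerate(prim_paths):
        on = start + i * step
        samples: Samples = []
        if i > 0:
            samples.append((start, "invisible"))      # hidden before its frame
        samples.append((on, "inherited"))              # shown during its frame
        if i < last:
            samples.append((on + step, "invisible"))  # hidden after its frame
        keys[path] = samples
    return keys


def value_at(samples: Samples, t: float) -> str:
    """Held (step) evaluation: the latest sample at or before t, else the first sample."""
    value = samples[0][1]
    for time, v in samples:
        if time <= t:
            value = v
    return value


keys = visibility_keys(["/World/Splat_0000", "/World/Splat_0001", "/World/Splat_0002"])
```

Each (time, token) pair could then be written with `UsdGeom.Imageable(prim).GetVisibilityAttr().Set(token, Usd.TimeCode(t))`. This automates the keying but leaves the underlying problem in place, because the stage still holds one prim per frame. That is why native sequence support matters.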

Manually keyed splat sequence for temporal playback.

4. Rendering and Alpha Blending

When splats overlapped each other or intersected translucent materials, we observed rendering artifacts, including hard edges and incorrect occlusion. This likely stems from billboard-style rendering, in which splats are drawn as 2D planes ordered by depth sorting. Because they are not composited volumetrically, blending them with other geometry, especially transparent objects, produces visual artifacts.
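Sort order matters because of how depth-sorted blending works. The sketch below implements generic front-to-back alpha compositing, the standard "over" operator applied after sorting. It assumes a single sort depth per fragment. If that depth comes from a splat’s center rather than from where the splat actually overlaps another surface at a given pixel, both the order and the blended color can be wrong. That is one plausible source of the occlusion errors we saw. The sketch is an illustration only, not a description of how Omniverse’s renderer works internally.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def composite(fragments: List[Tuple[float, RGB, float]]) -> RGB:
    """Front-to-back 'over' compositing of (sort_depth, color, alpha) fragments.

    The result depends entirely on the order implied by sort_depth.
    """
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0  # light still passing through after the fragments so far
    for _, color, alpha in sorted(fragments, key=lambda f: f[0]):
        for c in range(3):
            out[c] += transmittance * alpha * color[c]
        transmittance *= 1.0 - alpha
    return (out[0], out[1], out[2])


red, blue = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
# Same two fragments, different sort depths (e.g., center depth vs. true per-pixel depth).
red_first = composite([(1.0, red, 0.8), (2.0, blue, 0.5)])
blue_first = composite([(2.0, red, 0.8), (1.0, blue, 0.5)])
```

The two calls differ only in the sort depth assigned to each fragment, yet they produce different colors. A renderer that resolved overlap per sample along each ray, rather than with one sort depth per splat, would not depend on that single depth in this way.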

5. Shadows and Reflections

Splats do not currently cast or receive shadows, and they do not appear in reflections. Splats at different world positions also fail to blend with each other where they overlap in the camera view. This further suggests that they are treated as flat visual cards rather than volumetric content. The limitation restricts their use in photorealistic or physically accurate environments where lighting interaction is essential.

A cube casting shadows over a mesh and a splat sequence. The mirrored floor shows no reflection of the splat, and two overlapping splats show billboard rasterization artifacts.

What This Could Enable in Omniverse and Isaac Sim

With deeper support, 4D Gaussian Splats could enable several high-value use cases within the NVIDIA simulation ecosystem:

Sensor fidelity for AI perception: Splats could become visible to LIDAR, depth, and multimodal sensors, enabling synthetic data generation with realistic occlusions and surface interactions.

Photorealistic digital twins: Time-sequenced splats could represent human activity inside complex digital twin environments with visual accuracy far beyond rigged meshes.

Vision-language and scene understanding training: 4D splats provide a unique foundation for AI models to learn from temporally coherent, spatially rich representations of the real world.

Simulation replay with real-world data: Captured 4D content could be dropped into simulation scenes for replay, annotation, or interaction with virtual agents or environments.

To unlock these possibilities, splats must be treated as more than static assets. They need full integration into Omniverse’s rendering, sensor, and simulation systems, with proper handling of time, transparency, and light interaction.

Where We Go from Here

This work is ongoing. We’re building tools, testing edge cases, and continuing to evaluate the role splats can play inside Isaac Sim. The questions we’re exploring include:

Can splats be made visible to LIDAR and depth sensors?

Will there be native support for time-sequenced playback in USD pipelines?

Is there a path toward volumetric rendering with full shadow, reflection, and transparency support?

We’re excited about what’s possible and will continue to share what we learn. If you’re working on similar challenges in Omniverse, Isaac Sim, or volumetric content pipelines, we’d love to connect.