Evercoast's RED KOMODO Spatial Capture Rig

Documenting Evercoast's findings on using RED KOMODO cameras for high-fidelity volumetric capture.

Introduction

Evercoast ran a test production to evaluate whether RED KOMODO cameras are viable for high-fidelity volumetric video capture.

The goal was to validate that RED’s cinematic image quality, paired with Evercoast’s Gaussian splatting reconstruction and playback pipeline, can produce photorealistic 4D human performance captures for core markets that matter to both RED and Evercoast, including:

Film & Television Production

Visual Effects (VFX)

Virtual and Augmented Reality Experiences (XR)

Games and Interactive Content

While Evercoast’s platform has long supported professional multi-camera capture using its Mavericks software for camera control, recording, and live preview, this test was deliberately built using an ad hoc hardware setup. The intent was not to design a production-ready system, but rather to answer key questions about performance, workflow, and visual quality before deeper integration.

Purpose of the Test

This project was designed as a technical validation, not a final product deployment. The objectives included:

Image Quality Evaluation: Confirm that KOMODO cameras provide the fidelity and dynamic range required for high-end volumetric rendering with Gaussian splats.

Multi-View Synchronization: Validate synchronization across 36 cameras to ensure perfect frame alignment during high-motion capture.

Workflow Analysis: Identify bottlenecks and opportunities to improve speed and efficiency before fully integrating RED cameras into Evercoast’s technology stack.

Optimizing Camera Count: While 36 cameras is substantial, it is far fewer than the 100+ cameras often seen in traditional volumetric rigs. Our goal was to strike a balance between production practicality and visual quality, testing the limits of reconstruction efficiency.

Future Integration Planning: Gather insights to guide the next phase, which will leverage RED Connect for IP-based streaming and Mavericks for seamless control, recording, and preview.

Benchmarking Against RealSense: Compare capture quality between a 29-camera RealSense D455 array and the 36-camera RED KOMODO array. While we knew the KOMODOs would produce superior imagery, this test was intended to quantify how much better the results were.

This project provided essential learnings to inform a next-generation production workflow that removes much of the complexity seen in this initial rig.

System Overview

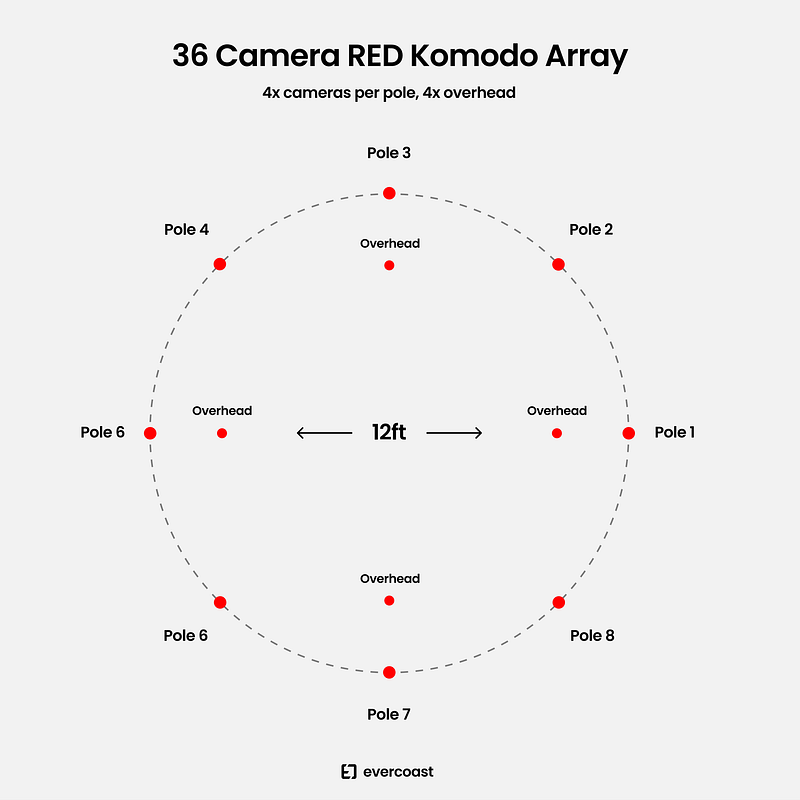

The test rig consisted of 36 RED KOMODO cameras arranged to fully cover a 360-degree capture volume for complete human performance capture.

Capture Volume

Diameter: 12 feet (3.66 meters)

Vertical Poles: 8 evenly spaced, each supporting 4 cameras at staggered heights: 1 ft, 3 ft, 5 ft, and 7 ft

Overhead Coverage: 4 additional cameras mounted at 10 ft for top-down coverage, eliminating blind spots.

This layout was designed to provide maximum coverage with significant overlap, a critical factor for robust 4D reconstruction.
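As a rough illustration of the layout described above, the following Python sketch computes nominal camera positions for the rig. Several things here are assumptions rather than details from the test: that the poles stand on the perimeter of the 12 ft circle, that every pole uses the same four heights, and that the overhead cameras sit on a smaller ring (the `overhead_radius_ft` value is hypothetical, since only their 10 ft height is stated). The function and parameter names are invented for this example.

```python
import math

FT_TO_M = 0.3048  # exact feet-to-metre conversion


def rig_positions(diameter_ft=12.0, poles=8, pole_heights_ft=(1, 3, 5, 7),
                  overhead_count=4, overhead_height_ft=10.0,
                  overhead_radius_ft=3.0):
    """Return nominal (x, y, z) camera positions in metres.

    Assumptions: poles on the perimeter of the capture circle, identical
    heights on every pole, overhead cameras on a ring of hypothetical radius.
    """
    radius_ft = diameter_ft / 2
    cams_ft = []
    # Pole-mounted cameras: evenly spaced poles, several heights per pole.
    for p in range(poles):
        angle = 2 * math.pi * p / poles
        for height in pole_heights_ft:
            cams_ft.append((radius_ft * math.cos(angle),
                            radius_ft * math.sin(angle),
                            float(height)))
    # Overhead cameras: evenly spaced on a smaller ring at a fixed height.
    for k in range(overhead_count):
        angle = 2 * math.pi * k / overhead_count
        cams_ft.append((overhead_radius_ft * math.cos(angle),
                        overhead_radius_ft * math.sin(angle),
                        overhead_height_ft))
    # Convert every coordinate from feet to metres.
    return [tuple(round(c * FT_TO_M, 3) for c in cam) for cam in cams_ft]


positions = rig_positions()
print(len(positions))  # 36 = 8 poles x 4 heights + 4 overhead
```

Positions like these would only be a starting guess for layout planning; actual camera poses come from calibration.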

Camera Configuration

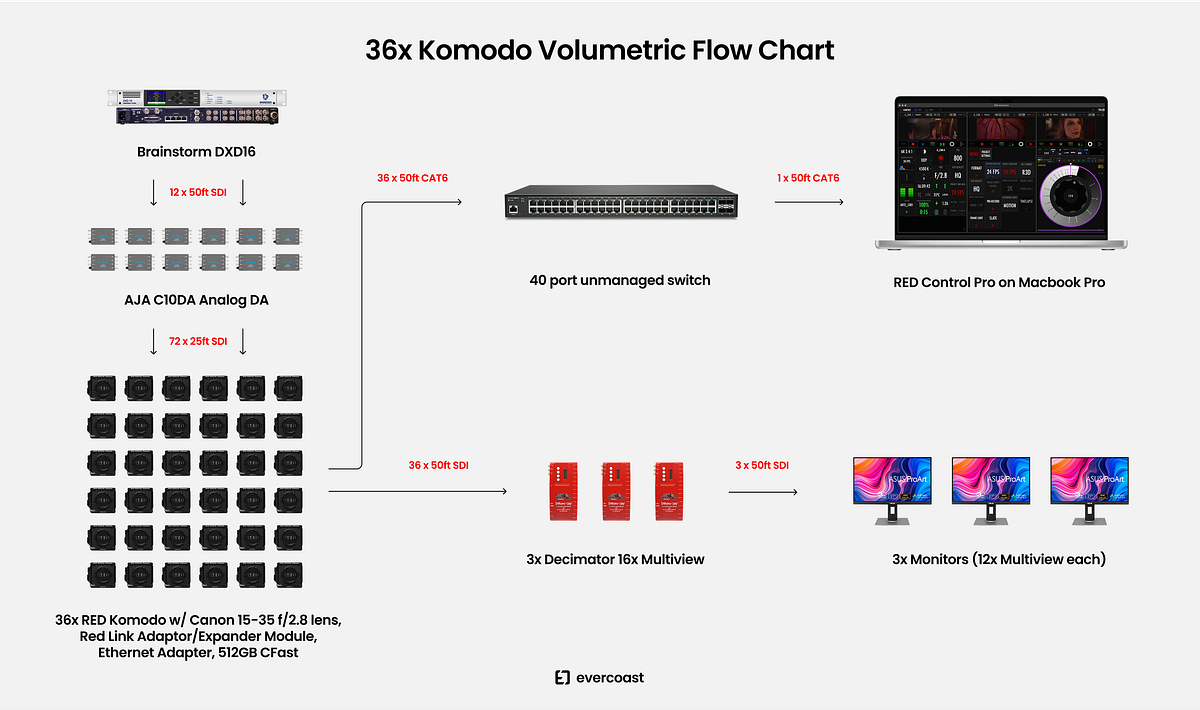

Each KOMODO was outfitted with a straightforward kit:

Lens: Canon RF 15–35mm f/2.8 zoom

Settings: 4K 17:9, 30 fps, 1/240 s shutter, ISO 800

Modules: RED KOMODO Expander Module and Link Adapter

Media: R3D LQ recorded to a RED PRO 512GB CFast card

Connectivity: USB-C to Ethernet dongle for network control

Monitoring & Sync:

3× 12G-SDI cables per camera: video out, genlock in, timecode in

Brainstorm DXD-16 and AJA C10DA units for distributing genlock and timecode

Decimator DMON-16S multiviewers feeding three monitors for live camera feeds
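To put the shutter setting from the camera kit in context, the short Python sketch below converts 30 fps at 1/240 s into an equivalent shutter angle and estimates how far a moving subject travels during one exposure. The 5 m/s subject speed is a hypothetical figure chosen for illustration, not a measurement from the test, and the function names are invented for this example.

```python
def shutter_angle_deg(fps, shutter_denominator):
    """Shutter angle in degrees for an exposure of 1/shutter_denominator s."""
    return 360.0 * fps / shutter_denominator


def motion_blur_mm(speed_m_per_s, shutter_denominator):
    """Distance in millimetres a subject moves during one exposure."""
    return speed_m_per_s / shutter_denominator * 1000.0


print(shutter_angle_deg(30, 240))  # 45.0
# Hypothetical fast hand movement at 5 m/s: roughly 21 mm of travel per frame.
print(round(motion_blur_mm(5.0, 240), 1))
```

A 45-degree shutter is much narrower than the 180 degrees typical of cinematic footage, which keeps per-frame motion blur low. That generally helps multi-view reconstruction, although the write-up does not state the reasoning behind this particular setting.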

Networking & Control

A basic network was established using CAT-6A cabling and an unmanaged switch.

Each camera was connected via the RED KOMODO Link Adapter to a central switch, which fed into a MacBook Pro running RED Control Pro. RED Control Pro enabled:

Simultaneous start/stop of all cameras

Basic remote camera adjustments

Lens control for focus, iris, and zoom

This setup provided only baseline functionality. In future iterations, Evercoast’s Mavericks software will handle full array control, monitoring, and data management with a much cleaner IP-based network workflow through RED Connect.

Workflow

The workflow followed four key stages:

Capture: Cameras recorded synchronized footage using hardwired genlock and timecode to maintain exact frame alignment.

Data Offload: Footage was ingested from the CFast cards and organized into a master dataset. Clips were trimmed to the exact frame range shared by all cameras using REDline, RED's command-line tool, and then converted into Evercoast’s take format.

Cloudbreak Upload: Trimmed takes were uploaded into Cloudbreak, Evercoast’s spatial rendering platform. Cloudbreak streamlined distributed rendering and storage management.

Gaussian Splat Reconstruction: Evercoast’s Gaussian splatting implementation processed the data across multiple GPU-accelerated cloud instances hosted on AWS.

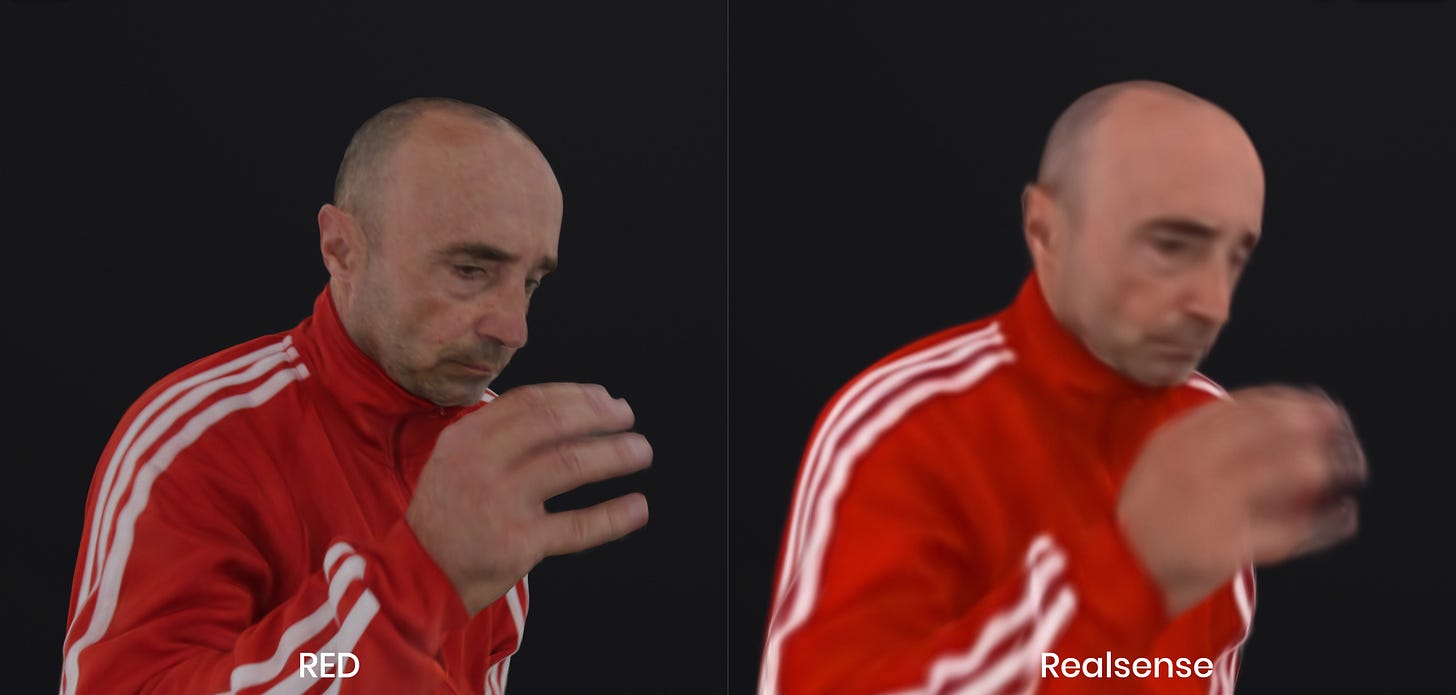
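The trimming step in the Data Offload stage depends on finding the frame range that every camera recorded. A minimal Python sketch of that calculation follows. It assumes 30 fps non-drop-frame timecode and a per-clip start timecode and frame count; the camera names and values are made up, and the sketch does not show how REDline itself is invoked.

```python
def timecode_to_frames(tc, fps=30):
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame index."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def trim_offsets(clips, fps=30):
    """Map each camera to the (first, last) clip-relative frames to keep.

    `clips` maps a camera name to (start_timecode, frame_count).
    Returns an empty dict if the clips share no common frames.
    """
    starts = {name: timecode_to_frames(tc, fps) for name, (tc, _) in clips.items()}
    # The shared range begins at the latest start and ends at the earliest end.
    first = max(starts.values())
    last = min(starts[name] + count - 1 for name, (_, count) in clips.items())
    if first > last:
        return {}
    return {name: (first - start, last - start) for name, start in starts.items()}


# Hypothetical clips: cameras started within a few frames of each other.
clips = {
    "cam01": ("01:00:00:00", 300),
    "cam02": ("01:00:00:02", 300),
    "cam03": ("00:59:59:29", 301),
}
print(trim_offsets(clips))
# {'cam01': (2, 299), 'cam02': (0, 297), 'cam03': (3, 300)}
```

Each camera keeps the same number of frames (298 in this example), so frame N in one trimmed clip corresponds to frame N in every other clip.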

Comparing RED and RealSense

It may seem obvious to say, “of course a RED camera will outperform a RealSense for this kind of capture.” And yes, one is a $400 depth camera and the other is a $4,000 cinema camera. But cost alone doesn’t determine the right tool for a job. There are practical trade-offs around budget, setup complexity, data volume, workflow efficiency, calibration, and rendering performance. A controlled technical comparison helps clarify not just that the RED performs better, but how and why, and whether the gains justify the additional hardware.

To provide a direct, side-by-side evaluation, both camera arrays were mounted and operated simultaneously within the same capture volume:

RED KOMODO Array: 36 cameras in a full 360° configuration

RealSense D455 Array: 29 cameras arranged for comprehensive coverage on the same pole positions

Each array recorded independently using its own workflow, with separate datasets managed, uploaded, and rendered through Cloudbreak. This allowed for a true apples-to-apples comparison without any overlap or blending of data.

Independent Reconstruction

The RED and RealSense datasets were processed independently through Evercoast’s Gaussian splatting pipeline, so that differences in output were driven by camera quality and capture fidelity, not workflow variations.

Quick Test — Big Insights

Although this rig was built quickly and relied on a largely manual workflow, it did exactly what we needed: it validated the viability of KOMODO cameras for professional volumetric capture.

Key Takeaways:

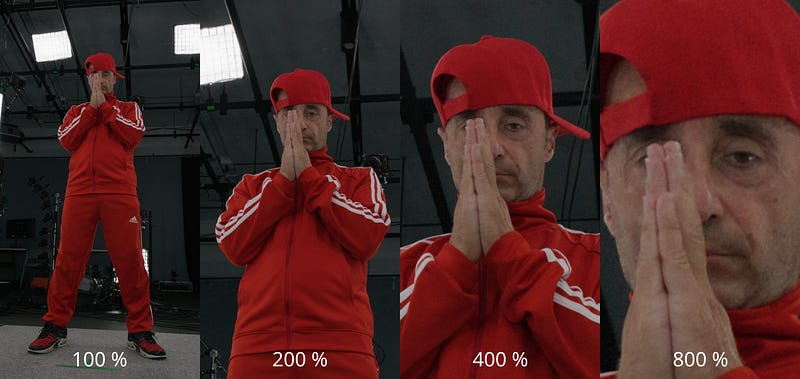

The image quality was exceptional, delivering the dynamic range and resolution needed for premium content.

RED cameras proved capable of precise multi-camera synchronization, a critical requirement for volumetric capture.

The workflow revealed where future integrations with Mavericks and RED Connect will dramatically simplify setup and operation.

The KOMODO captures retained subtle textures like skin pores, fabric weave, and hair strands, which were far less defined in the RealSense outputs.

RED footage provided richer dynamic range and accurate color reproduction without banding or noise.

The KOMODO array relied solely on monocular image data, eliminating the need for stereo depth pairs. Despite lacking dedicated depth sensors, the volumetric reconstructions were significantly cleaner and more stable.

Motion tearing, jitter, and occlusion errors common in lower-cost cameras were virtually eliminated in the RED dataset.

We expected these results, but it’s always great to see expectations confirmed through real-world testing.

What’s Next

The next phase of our efforts will focus on creating a production-ready, turnkey, and easy-to-operate system, with:

Centralized IP streaming via RED Connect

Full array management through Mavericks

Simplified cabling and hardware architecture

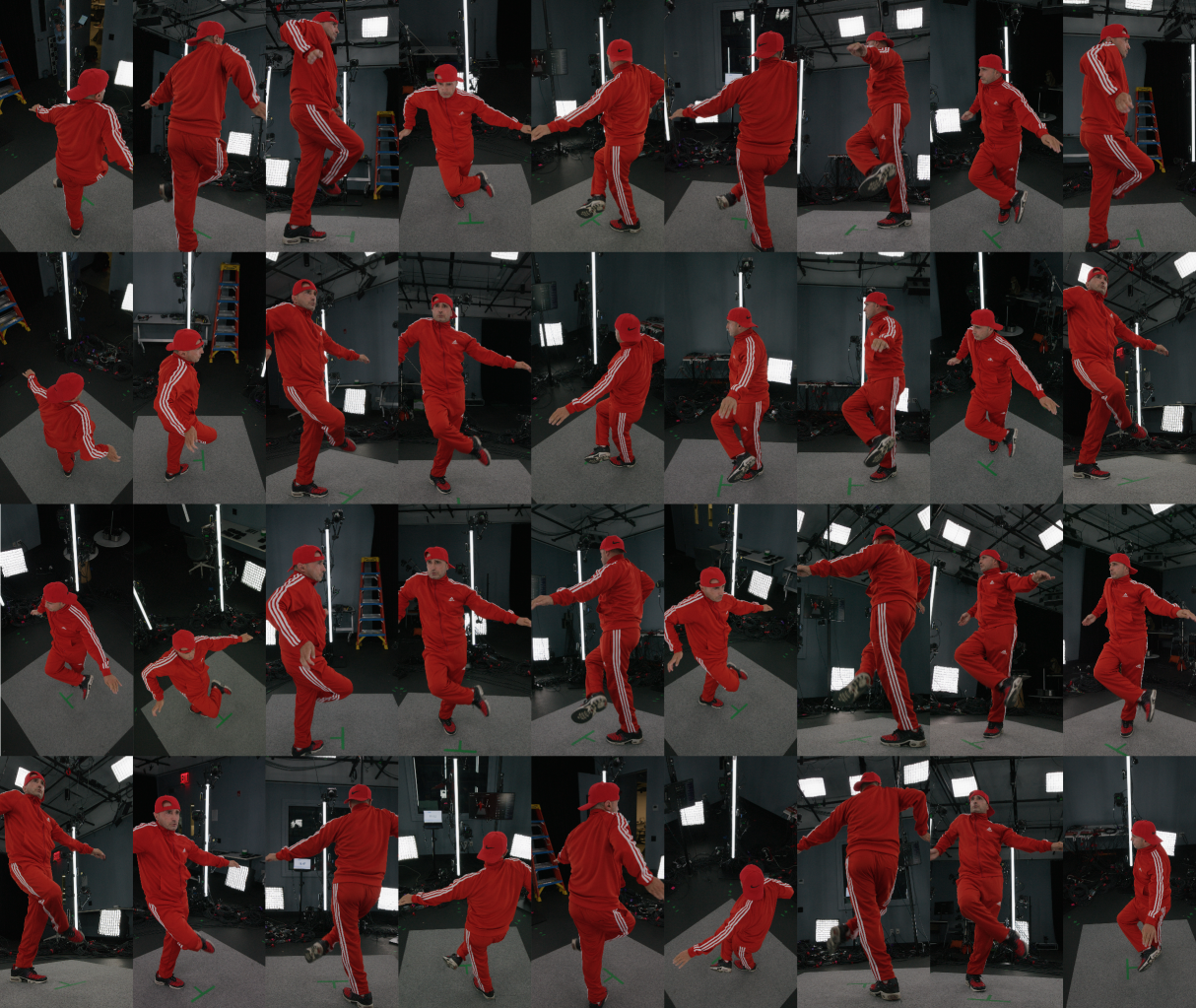

Sample Outputs

The result: photorealistic 4D sequences with true cinematic detail. The sample outputs from this test showcase:

High-detail facial capture and natural expressions

Smooth, artifact-free motion

True-to-life textures and lighting

Conclusion

This test validated that RED KOMODO cameras, paired with Evercoast’s Gaussian splatting pipeline, can achieve best-in-class volumetric capture for the entertainment industry.

While the rig was deliberately built as a test, the insights gained will guide a streamlined, user-friendly system.

With full integration into Mavericks and RED Connect, future deployments will feature seamless IP-based control, recording, and live preview, bringing cinematic volumetric capture to a new level of quality and efficiency.